Dive into the ethical considerations of artificial intelligence. Explore key issues like bias, transparency, privacy, and job displacement. Understand the critical need for ethical guidelines to ensure AI benefits society responsibly.

Artificial Intelligence (AI) is changing many industries and shaping our future. But as we rely on AI more, we must think carefully about its ethics.

This blog post explores the key ethical issues in artificial intelligence. It covers the need for data privacy and security and looks at how AI affects jobs and society.

Understanding these issues is vital for developers and policymakers, because it helps ensure AI is built and used responsibly.

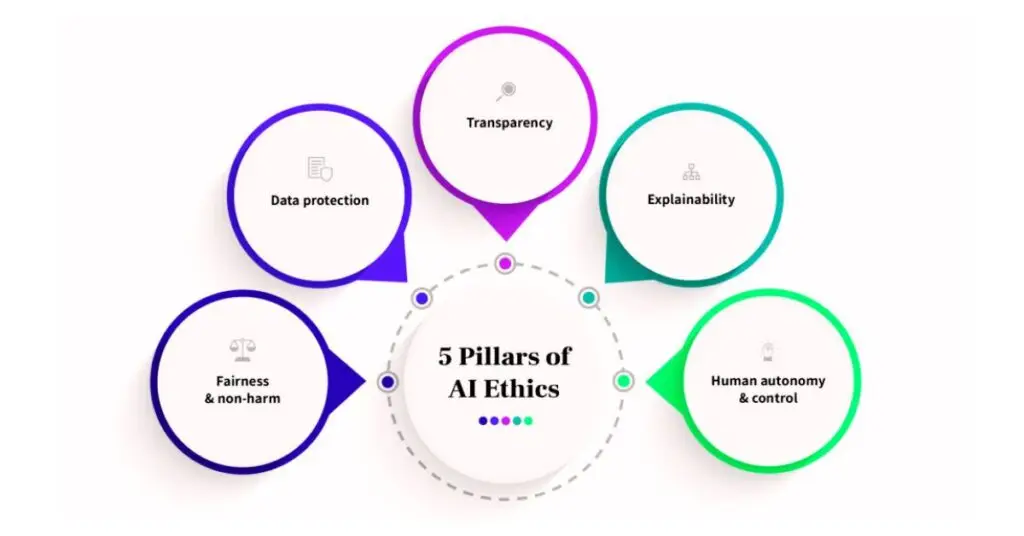

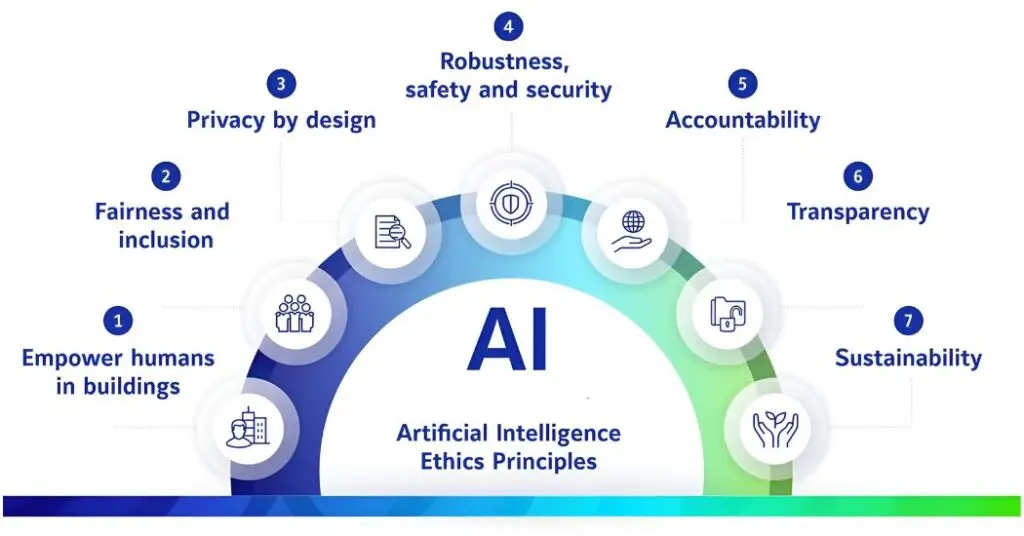

Fundamental Ethical Principles in AI

As AI grows, following key ethical principles is crucial to ensure it is developed and used responsibly.

Here are the main principles guiding ethical AI development:

Fairness and Non-Discrimination

AI systems must treat everyone fairly and without bias. This means:

- Equal Treatment: AI algorithms should not favor or discriminate against any group, whether defined by race, gender, ethnicity, or other sensitive characteristics.

- Bias Mitigation: Developers should actively find and reduce biases in AI models, for example by using diverse training datasets and fairness-aware algorithms.

Transparency and Explainability

Openness about how AI systems operate is key to trust and accountability. This includes:

- Openness: AI processes and decision-making criteria should be clear and understandable to users.

- Explainability: AI decisions, especially in critical areas like healthcare and finance, should be explained so that users understand how outcomes are determined.

Accountability

Accountability means there’s clear responsibility for AI actions and decisions. This involves:

- Responsibility: It’s important to define who is accountable for AI system outcomes. This could be developers, companies, or other stakeholders.

- Feedback Mechanisms: Channels should be set up for users to give feedback and appeal AI decisions. This ensures continuous improvement and accountability.

Data Privacy and Security

Protecting user data is critical in AI development. This includes:

- Privacy Protection: Strong data privacy measures should be implemented. This safeguards personal information and ensures ethical data collection practices.

- Security Measures: AI systems must be secure from data breaches and cyber threats. This maintains the integrity and confidentiality of user data.

Safety and Reliability

AI systems must be safe and reliable to prevent harm. This involves:

- Safety Protocols: Strong safety practices should be developed and applied to reduce risks and unintended consequences.

- Reliability Testing: AI systems should be thoroughly tested in controlled environments before deployment. Their performance should also be monitored after deployment.
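Post-deployment monitoring can start simply. The sketch below, a hypothetical example rather than a standard tool, keeps a rolling window of recent predictions alongside the outcomes later observed and flags the system for human review when accuracy in that window drops below a chosen threshold. The `AccuracyMonitor` class, its `window` size, and the `min_accuracy` cutoff are illustrative assumptions; real deployments usually track several metrics, including per-group performance and shifts in the input data.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag degradation.

    Hypothetical settings: `window` and `min_accuracy` are chosen per system.
    """

    def __init__(self, window: int = 100, min_accuracy: float = 0.9):
        # deque with maxlen drops the oldest result once the window is full.
        self.results = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual) -> None:
        # Store whether the prediction matched the observed outcome.
        self.results.append(prediction == actual)

    def accuracy(self) -> Optional[float]:
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_review(self) -> bool:
        acc = self.accuracy()
        # Only flag once the window is full, to avoid alerts from tiny samples.
        return (
            len(self.results) == self.results.maxlen
            and acc is not None
            and acc < self.min_accuracy
        )
```

Waiting until the window is full trades a slightly later first alert for fewer false alarms caused by a handful of early mistakes.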

By following these ethical principles, we can ensure AI is developed and used responsibly. This builds trust in AI systems and encourages their proper use in society.

Data Privacy and Security

Data privacy and security are key in ethical AI development. Ensuring AI systems handle data responsibly protects individuals’ personal information. It also builds trust in AI technologies. Here are the main considerations for data privacy and security in AI:

Importance of Data Privacy

Protecting user data is essential for trust and legal compliance. This involves:

- Ethical Data Collection: AI systems should collect only the data they need and avoid gathering excessive or irrelevant information.

- User Consent: Users should be informed about data collection and usage. Explicit consent should be obtained before collecting personal data.

- Anonymization: Data should be anonymized to protect user identities, making it hard to trace records back to individual users while still allowing analysis.
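Two of these ideas, collecting only what is needed and hiding who the data belongs to, can be sketched in a few lines of Python. This minimal example assumes a hypothetical allow-list of fields (`ALLOWED_FIELDS`) and a secret key stored outside the dataset. It drops every field not on the list and replaces the user ID with a keyed hash (HMAC-SHA256). Strictly speaking, this is pseudonymization rather than anonymization: under the GDPR, pseudonymized data still counts as personal data, and stronger anonymization usually requires techniques such as aggregation or differential privacy.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this illustrative model needs.
ALLOWED_FIELDS = {"age_band", "region", "purchase_count"}


def minimize(record: dict) -> dict:
    """Keep only allow-listed fields (data minimization)."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def pseudonymize_id(user_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same user always maps to the same token, so records can still be
    joined for analysis, but the token cannot be computed without the key.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_analysis(record: dict, secret_key: bytes) -> dict:
    """Drop unneeded fields and swap the user ID for a pseudonymous token."""
    cleaned = minimize(record)
    cleaned["user_token"] = pseudonymize_id(record["user_id"], secret_key)
    return cleaned
```

Because anyone holding the key can compute tokens for known user IDs and match them, the key itself needs the same protection as the original identifiers.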

Security Measures

Protecting data from breaches and cyber threats is key. This means:

- Encryption: Encrypt data in transit and at rest, using strong, well-established algorithms, to prevent unauthorized access.

- Access Controls: Limit data access based on roles and responsibilities, so that only authorized people can see sensitive information.

- Regular Audits: Conduct regular security audits to find and fix vulnerabilities, and keep defenses up to date as threats and technologies change.
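Role-based access control with an audit trail combines the last two points: each request is checked against the permissions granted to a role, and every attempt is logged for later review. The sketch below assumes a hypothetical role-to-permission mapping (`ROLE_PERMISSIONS`) and uses plain Python; production systems typically rely on an identity provider or on the access-control features of their database or cloud platform instead.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping; a real system would load this from configuration.
ROLE_PERMISSIONS = {
    "analyst": {"read:aggregates"},
    "data_steward": {"read:aggregates", "read:personal_data"},
    "admin": {"read:aggregates", "read:personal_data", "delete:personal_data"},
}


@dataclass
class AccessController:
    role_permissions: dict
    audit_log: list = field(default_factory=list)

    def is_allowed(self, role: str, permission: str) -> bool:
        # Unknown roles get an empty permission set (deny by default).
        return permission in self.role_permissions.get(role, set())

    def request(self, user: str, role: str, permission: str) -> bool:
        allowed = self.is_allowed(role, permission)
        # Log every attempt, allowed or not, so audits can review access.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
        })
        return allowed
```

For instance, an `analyst` asking for `read:personal_data` is refused, a `data_steward` making the same request is allowed, and both attempts appear in `audit_log`.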

Compliance with Regulations

Following data protection laws is vital for ethical AI. This includes:

- GDPR and CCPA Compliance: Make sure AI systems comply with the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). These laws protect personal data and user rights.

- Data Breach Response: Have a plan for responding to data breaches, including notifying affected users and fixing the underlying problem.

Building Trust through Transparency

Being open about data handling builds trust. This means:

- Clear Privacy Policies: Have clear privacy policies that explain data use and protection.

- User Control: Let users control their data. They should be able to access, correct, or delete it.
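Giving users control over their data means the system must support a few basic operations. This toy in-memory store, a hypothetical illustration rather than a production design, handles access, correction, and deletion requests. A real service would also verify the requester’s identity, propagate deletions to backups and downstream copies, and keep a record of each request.

```python
from copy import deepcopy


class UserDataStore:
    """Hypothetical in-memory store supporting user data requests."""

    def __init__(self):
        self._records: dict = {}

    def save(self, user_id: str, data: dict) -> None:
        self._records[user_id] = dict(data)

    def access(self, user_id: str) -> dict:
        # Return a copy so the caller cannot alter stored data by accident.
        return deepcopy(self._records.get(user_id, {}))

    def correct(self, user_id: str, field_name: str, value) -> None:
        if user_id not in self._records:
            raise KeyError(f"no data held for {user_id}")
        self._records[user_id][field_name] = value

    def delete(self, user_id: str) -> bool:
        # True if data existed and was removed, False if nothing was held.
        return self._records.pop(user_id, None) is not None
```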

By following these practices, AI developers can build systems that respect user rights and earn trust. This supports legal compliance and promotes ethical AI use.

Bias and Discrimination

Bias and discrimination are major ethical concerns in AI. They can cause unfair outcomes and make existing inequalities worse, so tackling them is essential for fair and just AI. Here are the main sources of bias and the main ways to reduce it:

Sources of Bias in AI

Bias in AI can come from several sources, including:

- Historical Data Bias: AI learns from historical data, which may reflect past biases. If these are not addressed, the system can reproduce them and produce unfair results.

- Algorithmic Bias: Bias can also come from design choices in the algorithms themselves, such as which features are used or what objective is optimized. Algorithms not designed with fairness in mind can produce discriminatory outcomes.

Mitigation Strategies

To reduce bias and discrimination in AI, developers can use several strategies:

- Diverse Training Datasets: Train AI models on datasets that represent a wide range of people and situations, which reduces the risk of learning a skewed picture of the world.

- Fairness-Aware Algorithms: Use algorithms designed with fairness in mind, which can detect and correct biases during training.

- Regular Audits: Regularly check AI systems for bias by continuously monitoring their outputs and making fairness improvements where needed.
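One common way to run such an audit is to compare how often a model gives a positive outcome (for example, a loan approval) to each group. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest, and flags the model when that ratio falls below 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in US employment-discrimination screening. It checks only one fairness notion (demographic parity); other metrics, such as comparing error rates across groups, can give different answers. The function names are illustrative.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Fraction of positive predictions (1 = e.g. 'approve') per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def audit(groups, predictions, threshold=0.8):
    """Flag the model if the ratio falls below the threshold.

    0.8 mirrors the four-fifths rule of thumb: a screening heuristic,
    not a legal or statistical proof of fairness or unfairness.
    """
    rates = selection_rates(groups, predictions)
    ratio = disparate_impact_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}
```

With hypothetical predictions where group A is approved 6 times out of 10 and group B 3 times out of 10, the rates are 0.6 and 0.3, the ratio is 0.5, and the audit flags the model for review.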

Transparency and Accountability

Being open and accountable is key to tackling AI bias:

- Explainability: Make sure AI systems can explain their decisions clearly. This helps spot biases and understand how decisions are made.

- Accountability Mechanisms: Set up clear ways to hold developers and organizations accountable for AI outcomes. This includes processes for users to report and challenge biased decisions.
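For simple models, explanations can be exact. In a linear scoring model, each feature’s contribution is its weight times its value, so the score breaks down feature by feature. The sketch below assumes a hypothetical credit-scoring model with made-up weights (`WEIGHTS`, `INTERCEPT`); complex models such as neural networks need approximate, post-hoc explanation methods instead (SHAP and LIME are well-known examples).

```python
# Hypothetical weights for an illustrative linear scoring model.
WEIGHTS = {"income": 0.5, "debt_ratio": -1.0, "years_employed": 0.25}
INTERCEPT = 0.5


def score_with_explanation(features):
    """Return the score plus each feature's contribution, largest first."""
    # In a linear model, contribution = weight * feature value.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = INTERCEPT + sum(contributions.values())
    # Rank by absolute size so the most influential features come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked
```

For an applicant with `income=2.0`, `debt_ratio=0.75`, and `years_employed=1.0` (hypothetical standardized values), the contributions are 1.0, -0.75, and 0.25, giving a score of 1.0 with income as the most influential factor.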

Ethical Guidelines and Regulations

Following ethical guidelines and regulations can reduce bias and discrimination in AI:

- Ethical Frameworks: Use established ethical frameworks for fair AI development, built on principles such as fairness, transparency, and accountability.

- Compliance with Laws: Make sure AI systems comply with relevant laws, including anti-discrimination and data protection laws.

Together, these measures make AI systems fairer and more just, and they build trust and confidence among users.

Impact on Employment and Society

AI technologies have major effects on jobs and society. AI brings many benefits, but it also brings challenges. Here are the main points for understanding AI’s impact:

Job Displacement and Creation

AI can both eliminate and create jobs:

- Automation of Tasks: AI can automate tasks, leading to job loss in some areas. For example, jobs in manufacturing and administration are at risk.

- New Job Opportunities: On the other hand, AI creates new jobs in AI development, data analysis, and cybersecurity. These jobs need new skills and offer career growth.

Workforce Transition

Supporting workers through this transition is key to reducing the impact of job loss. This includes:

- Reskilling and Upskilling: Offer training to help workers get new skills for the AI job market. This includes technical and soft skills.

- Career Support Services: Provide career counseling and job placement to help workers find new jobs.

Social Implications

AI’s role in society has broader implications. These include:

- Economic Inequality: AI’s benefits might not be shared equally, worsening economic inequality. It’s important to ensure fair access to AI’s benefits.

- Ethical Use of AI: AI must be used ethically to avoid negative impacts. This means addressing biases and respecting human rights.

Policy and Regulation

Effective policies and regulations are needed to manage AI’s impact on society. This involves:

- Regulatory Frameworks: Create and enforce rules for AI’s ethical use. This includes data protection laws and guidelines for transparency and accountability.

- Public Engagement: Involve the public in AI discussions. This ensures AI development aligns with societal values and addresses concerns.

By understanding AI’s impact on jobs and society, we can capture its benefits while addressing its challenges. This promotes a fair and inclusive society and helps ensure AI is developed and used responsibly.

Legal and Regulatory Frameworks

AI technologies need strong legal and regulatory frameworks to ensure responsible and ethical use. Here are the main elements of such frameworks:

Current Regulations

Existing laws already provide a foundation for AI governance, including:

- Data Protection Laws: Laws like the GDPR in the European Union and the CCPA in California set data privacy standards. They require responsible and transparent data handling.

- Anti-Discrimination Laws: Laws against discrimination are crucial for fair AI systems. Complying with them helps prevent biased and discriminatory AI outcomes.

Future Directions

As AI advances, new rules will be needed:

- Proactive Policy Development: Policymakers need to think ahead and create rules for emerging AI risks and ethical issues, including standards for AI transparency, accountability, and fairness.

- International Collaboration: Because AI operates across borders, countries must work together to develop common rules and best practices.

Role of Policymakers

Policymakers play a key role in shaping the ethics of AI. This includes:

- Stakeholder Engagement: Rulemaking should involve many groups, including experts, academics, and civil society, so that rules are well informed and fair.

- Public Awareness: Educating the public about AI is crucial. It builds trust and helps people understand AI’s benefits and risks.

Ethical Guidelines

Legal rules alone are not enough; ethical guidelines are also needed. These include:

- AI Principles: Using principles like fairness, transparency, and accountability is important. These principles help ensure AI is used ethically.

- Best Practices: Following best practices, such as conducting impact assessments, helps ensure AI is developed responsibly.

By following these guidelines, we can make sure AI is developed and used ethically. This builds trust and supports AI’s growth.

Ethical AI in Practice

Putting ethical AI into practice means applying these rules and guidelines to real systems. Here are important considerations and best practices.

Examples from specific industries show what this looks like:

- Healthcare AI: AI in healthcare must protect patient privacy and data. Diagnostic AI tools should also be transparent about how they reach their conclusions.

- Financial Services: AI in finance must avoid biases. Regular checks and diverse training data help ensure fairness and accuracy.

Best Practices

Following best practices for ethical AI builds trust and supports responsible use:

- Transparency and Disclosure: Explain how AI systems work and make decisions. Provide clear explanations to users.

- User-Centric Design: Design AI with users in mind. Involve diverse groups during development to meet their needs.

- Continuous Monitoring and Evaluation: Regularly check AI systems for bias and fairness. Use feedback to improve the system.

Ethical Guidelines

Following ethical guidelines is important for responsible AI:

- Fairness: AI systems must treat everyone fairly. Use algorithms and data that avoid biases.

- Accountability: Make sure someone is responsible for AI decisions. Provide ways for users to report unfair decisions.

- Privacy and Security: Protect user data with strong privacy and security measures. Keep data safe from unauthorized access.

Promoting an Ethical Culture

Creating an ethical culture in organizations is key for responsible AI development:

- Ethical Training: Train developers and stakeholders in ethical AI so that everyone understands why ethics matters.

- Ethical Review Boards: Set up ethical review boards for AI projects to check that they follow ethical guidelines.

By following these best practices, organizations can make AI that is fair and transparent. This builds trust and supports AI’s growth.

Conclusion

As AI advances, we must focus on ethics. Following principles like fairness and transparency helps ensure that AI benefits everyone.

Protecting data and avoiding bias are key steps, and strong laws and guidelines give ethical AI a solid foundation.

By following these principles, we build trust in AI. Developers, policymakers, and users all play a part in AI’s future. Let’s make AI fair and beneficial for all.

Thank you for reading! If you found this helpful, share it and join the conversation on ethical AI.

FAQ: Ethical Considerations in Artificial Intelligence Development

What are the main ethical principles in AI development?

The main principles are fairness, transparency, accountability, data privacy and security, and safety and reliability. Together, they help ensure AI is developed and used responsibly, building trust and preventing harm.

How can AI developers mitigate bias in AI systems?

Developers can reduce bias by using diverse datasets and fairness-aware algorithms. Regular audits help find and fix biases in AI models.

Why is data privacy important in AI development?

Data privacy protects personal information, helps meet legal requirements, and builds trust in AI. Ethical data collection and strong security are essential to achieving it.

What impact does AI have on employment?

AI can replace and create jobs. It automates tasks but also opens new fields like AI development. Supporting workers through reskilling is crucial.

What role do regulations play in ethical AI development?

Regulations are vital for ethical AI. They set standards for data protection, help prevent discrimination, and promote transparency, which builds trust in AI technologies.