What is the History and Evolution of AI? Explore the fascinating journey of artificial intelligence from its early beginnings to modern advancements. Learn how AI has evolved over the decades and its impact on technology and society.

Artificial Intelligence (AI) has transformed from a speculative concept in ancient myths to a pivotal technology shaping our modern world. Understanding its history and evolution is crucial for grasping its current capabilities and future potential. This blog post delves into the fascinating journey of AI, from its philosophical roots to the cutting-edge advancements of today.

By exploring the milestones and challenges in AI’s development, we can appreciate the strides made and the hurdles overcome. Whether you’re a tech enthusiast, a student, or a professional in the field, this comprehensive overview will provide valuable insights into the dynamic world of artificial intelligence.

Ancient Philosophical Roots

The concept of artificial intelligence dates back to ancient times, when myths and philosophical ideas laid the groundwork for modern AI. Greek mythology is rich with stories of intelligent automata, such as Talos, a giant bronze man created by Hephaestus to protect Crete. These myths reflect the early human fascination with creating lifelike machines.

Greek philosophers like Aristotle and Plato also pondered the nature of human cognition and reasoning. They explored the idea that human thought could be broken down into logical steps, similar to a mathematical process. This line of thinking laid the foundation for symbolic AI, where reasoning is represented through symbols and rules.

In addition to Greek mythology, other ancient civilizations, such as the Egyptians and Chinese, had their myths and legends about artificial beings. These stories highlight a universal human desire to understand and replicate intelligence.

By examining these ancient philosophical roots, we can see that the quest to create intelligent machines is not a modern phenomenon but a timeless pursuit that has evolved over millennia.

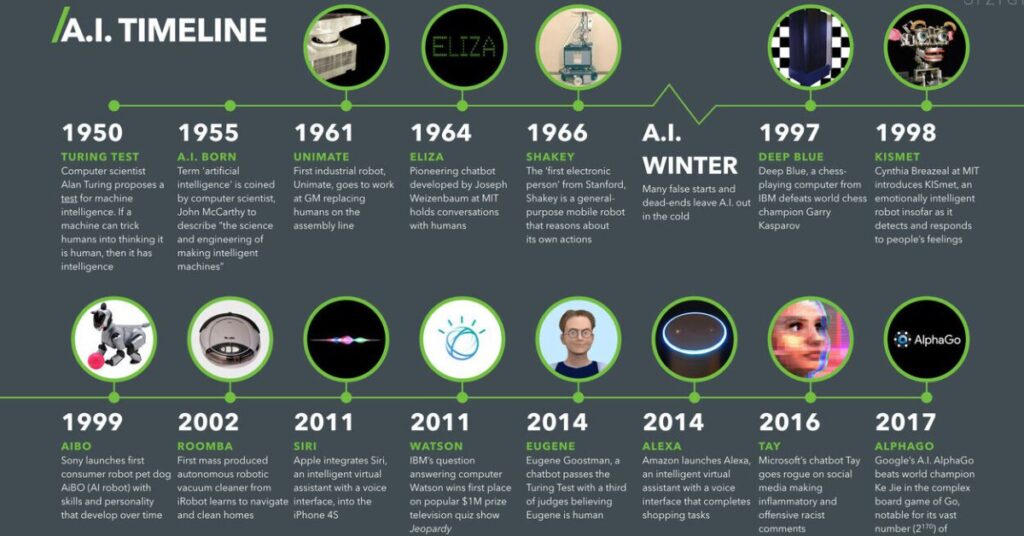

The Birth of Modern AI (1950s-1960s)

The modern era of artificial intelligence began in the 1950s, marked by significant theoretical and practical advancements. One of the pivotal figures was Alan Turing, a British mathematician who in 1936 had described a “universal machine” capable of performing any computation. In his 1950 paper “Computing Machinery and Intelligence,” he asked whether machines can think and proposed the imitation game, now known as the Turing test. Turing’s work laid the foundation for the concept of machine intelligence.

The 1956 Dartmouth Conference, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, is often cited as the birth of AI as a field. This conference brought together researchers to discuss the possibility of creating machines that could “think” and simulate human intelligence. The term “artificial intelligence” was coined by McCarthy in the 1955 proposal for this event, setting the stage for future research and development.

Early AI programs, such as the Logic Theorist (1955) and the General Problem Solver (1957), demonstrated that machines could perform tasks that required human-like reasoning. These programs used symbolic logic to solve problems, showcasing the potential of AI.

The late 1950s and 1960s saw further advancements with the development of the first AI languages, like LISP, created by John McCarthy in 1958. LISP became the standard programming language for AI research due to its flexibility and powerful features. The period also saw early neural network models, such as Frank Rosenblatt’s perceptron, inspired by the human brain’s structure and function.

By the end of the 1960s, AI had established itself as a promising field, with researchers optimistic about its future potential. However, the journey was just beginning, with many challenges and breakthroughs yet to come.

The First AI Winter (1970s)

The 1970s marked a challenging period for artificial intelligence, known as the first AI winter. This era was characterized by a significant decline in funding and interest in AI research due to unmet expectations and technological limitations.

During the 1960s, AI had garnered substantial enthusiasm, with early programs showing promise. However, by the early 1970s, it became evident that AI systems were not progressing as quickly as anticipated. Projects like machine translation and speech recognition failed to deliver practical results, leading to widespread disappointment.

Several factors contributed to this downturn. One major issue was the overestimation of AI’s capabilities. Researchers and the media had set high expectations, but the technology of the time, particularly in terms of computational power and memory, was insufficient to meet these goals. Additionally, the Lighthill Report, published in 1973, criticized the lack of progress in AI research and recommended reducing funding.

As a result, many AI projects were abandoned, and funding agencies, including DARPA, cut back on their support for AI research. This period of reduced funding and interest lasted until the early 1980s when new approaches and technologies began to revive the field.

The first AI winter serves as a reminder of the importance of managing expectations and the need for continuous innovation and realistic goal-setting in the development of advanced technologies.

Expert Systems and Renewed Interest (1980s)

The 1980s marked a resurgence in artificial intelligence, primarily driven by the development and success of expert systems. These systems were designed to mimic the decision-making abilities of human experts in specific domains.

Expert systems are computer programs that use a knowledge base of human expertise to solve complex problems. One of the earliest and most notable examples is DENDRAL, developed in the 1960s to assist chemists in identifying organic molecules. However, it was in the 1980s that expert systems gained significant traction.

MYCIN, another pioneering expert system, was developed at Stanford University in the 1970s to diagnose bacterial infections and recommend treatments. It demonstrated the potential of AI in practical applications, leading to increased interest and investment in the field. These systems used rule-based logic to simulate the reasoning process of human experts, making them valuable tools in various industries.
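
To make the idea of rule-based reasoning more concrete, here is a minimal sketch of a rule engine in Python. It uses forward chaining, one common way to apply if-then rules (MYCIN itself worked backward from goals and attached certainty factors to its rules). The rules and fact names below are invented for illustration and do not come from any real expert system.

```python
# Hypothetical rules: each pairs a set of required facts with one conclusion.
RULES = [
    ({"battery_weak"}, "recommend_charging_battery"),
    ({"engine_wont_start", "lights_dim"}, "battery_weak"),
    ({"engine_wont_start", "fuel_gauge_empty"}, "recommend_refueling"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


observed = {"engine_wont_start", "lights_dim"}
derived = forward_chain(observed, RULES)
print(sorted(derived - observed))
# → ['battery_weak', 'recommend_charging_battery']
```

The knowledge lives entirely in the `RULES` list, so every new situation needs a new hand-written rule. That is a large part of why such systems were easy to explain but costly to maintain.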

The commercial success of expert systems in the 1980s was notable. They were adopted by businesses for tasks such as medical diagnosis, financial forecasting, and troubleshooting complex machinery. Companies like Digital Equipment Corporation (DEC) and General Electric (GE) implemented expert systems to enhance their operations.

This period also saw the development of second-generation expert systems, which incorporated probabilistic reasoning to handle uncertainty and improve decision-making. These advancements helped solidify AI’s value in real-world applications and renewed interest in the field.

The success of expert systems in the 1980s demonstrated the practical benefits of AI, leading to increased funding and research. This era laid the groundwork for future advancements in AI, setting the stage for the development of more sophisticated technologies.

The Second AI Winter (Late 1980s-1990s)

The late 1980s to mid-1990s marked the second AI winter, a period characterized by reduced funding and interest in artificial intelligence research. This downturn was primarily due to the limitations of expert systems, which had failed to meet the high expectations set during their initial success in the 1980s.

Expert systems, while initially promising, encountered significant practical and technical limitations. They struggled with handling unexpected inputs and required extensive manual updates to their knowledge bases. As a result, businesses began to lose confidence in their effectiveness, leading to a decline in investment.

Another contributing factor was the collapse of the LISP machine market. LISP machines were specialized computers designed to run AI applications efficiently. However, by the late 1980s, general-purpose computers from companies like IBM and Apple had become more powerful and cost-effective, rendering LISP machines obsolete.

The Japanese Fifth Generation Computer Systems project, launched in the early 1980s, also failed to deliver on its ambitious goals. This project aimed to create computers using massively parallel processing and logic programming to achieve breakthroughs in AI. However, it fell short of expectations, further contributing to the disillusionment with AI.

The Strategic Computing Initiative, a major U.S. government-funded AI research program, also faced setbacks. Funding cuts and a shift in focus away from AI research led to a slowdown in progress.

Despite these challenges, the second AI winter provided valuable lessons. It highlighted the need for more realistic expectations and sustainable approaches to AI development. Researchers began to explore new methodologies, such as machine learning, which would eventually lead to the resurgence of AI in the late 1990s and early 2000s.

The Rise of Machine Learning (1990s-2000s)

The 1990s and 2000s were transformative decades for artificial intelligence, marked by the rise of machine learning. This period saw significant advancements in algorithms and computational power, enabling machines to learn from data and improve their performance over time.

One of the key developments was the introduction of support vector machines (SVMs) by Vladimir Vapnik and his colleagues in the early 1990s. SVMs became popular for their ability to handle high-dimensional data and perform well in classification tasks. Around the same time, decision tree algorithms gained traction, providing a simple yet powerful method for making predictions based on data.

In 1995, Tin Kam Ho introduced random decision forests, and in 2001 Leo Breiman developed the random forest algorithm, which improved model accuracy through ensemble learning. This technique combines multiple decision trees to create a more robust and accurate predictive model.
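
The ensemble idea can be sketched in a few lines of Python. This is a simplified illustration, not the full random forest algorithm: it uses one-split "stumps" instead of full decision trees, works on a single feature, and leaves out random feature selection. It shows only the two core steps, training each model on a bootstrap sample and combining predictions by majority vote. All function names and the toy data are assumptions made for this example.

```python
import random
from collections import Counter


def train_stump(samples):
    """Fit a one-split 'tree' on (x, label) pairs with labels 0 or 1.

    Tries every midpoint between sorted x values and keeps the threshold
    with the fewest training errors, predicting 1 when x > threshold.
    """
    xs = sorted({x for x, _ in samples})
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])] or [xs[0]]
    best_threshold, best_errors = candidates[0], len(samples) + 1
    for t in candidates:
        errors = sum((x > t) != bool(label) for x, label in samples)
        if errors < best_errors:
            best_threshold, best_errors = t, errors
    return best_threshold


def train_forest(samples, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    return [
        train_stump([rng.choice(samples) for _ in samples])
        for _ in range(n_trees)
    ]


def predict(forest, x):
    """Return the majority vote of all stumps for input x."""
    votes = Counter(int(x > threshold) for threshold in forest)
    return votes.most_common(1)[0][0]


# Toy data: small values belong to class 0, large values to class 1.
data = [(1, 0), (2, 0), (3, 0), (4, 0), (5, 0),
        (6, 1), (7, 1), (8, 1), (9, 1), (10, 1)]
forest = train_forest(data)
print(predict(forest, 2.5), predict(forest, 8.5))
```

Because each stump sees a slightly different sample, the thresholds differ from one stump to the next, and the vote smooths out the mistakes of any single one.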

Neural networks also regained momentum, thanks to the backpropagation algorithm, which was popularized in 1986. This algorithm allowed multi-layer networks to learn complex patterns and laid the groundwork for deep learning. Researchers like Geoffrey Hinton and Yann LeCun made significant contributions to this field, paving the way for future breakthroughs.
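
As a rough illustration of what backpropagation does, the Python sketch below computes gradients for a tiny network with one hidden layer by applying the chain rule from the output back toward the input, then checks one gradient against a finite-difference estimate. The network size, weights, and function names are arbitrary choices for this example, not a reconstruction of any historical system.

```python
import math


def forward(params, x):
    """One hidden layer with tanh units, followed by a linear output."""
    hidden = [
        math.tanh(sum(w * xi for w, xi in zip(row, x)))
        for row in params["w1"]
    ]
    output = sum(w * h for w, h in zip(params["w2"], hidden))
    return hidden, output


def loss(params, x, target):
    """Squared error between the network output and the target."""
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2


def backprop(params, x, target):
    """Return gradients of the loss with respect to w1 and w2."""
    hidden, output = forward(params, x)
    d_output = output - target                    # dL/d(output)
    grad_w2 = [d_output * h for h in hidden]      # dL/dw2[j]
    # Pass the error back through each tanh unit: tanh'(z) = 1 - tanh(z)^2.
    d_hidden = [
        d_output * w * (1 - h * h)
        for w, h in zip(params["w2"], hidden)
    ]
    grad_w1 = [[d * xi for xi in x] for d in d_hidden]
    return grad_w1, grad_w2


def numeric_grad_w1(params, x, target, j, i, eps=1e-6):
    """Finite-difference estimate of dL/dw1[j][i], used as a sanity check."""
    original = params["w1"][j][i]
    params["w1"][j][i] = original + eps
    up = loss(params, x, target)
    params["w1"][j][i] = original - eps
    down = loss(params, x, target)
    params["w1"][j][i] = original
    return (up - down) / (2 * eps)


params = {"w1": [[0.5, -0.3], [0.8, 0.2]], "w2": [1.0, -1.5]}
x, target = [1.0, 2.0], 0.5
grad_w1, grad_w2 = backprop(params, x, target)
estimate = numeric_grad_w1(params, x, target, 0, 1)
print(abs(grad_w1[0][1] - estimate) < 1e-6)
```

Training then consists of repeatedly nudging each weight a small step against its gradient. The finite-difference check is only there to confirm that the chain-rule arithmetic is right.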

The 2000s were characterized by the rise of big data, which provided vast amounts of information for training machine learning models. This era saw the development of more sophisticated algorithms and, toward the end of the decade, the growing use of graphics processing units (GPUs) to accelerate computations. In 2006, Geoffrey Hinton and his colleagues, including Simon Osindero, Yee-Whye Teh, and Ruslan Salakhutdinov, published influential papers on deep belief networks and deep autoencoders, sparking renewed interest in deep learning.

These advancements laid the foundation for modern AI applications, such as image and speech recognition, natural language processing, and autonomous systems. The rise of machine learning during the 1990s and 2000s was a pivotal period that set the stage for the AI revolution we are witnessing today.

The Evolution of Big Data and Deep Learning (2010s to Present)

The 2010s marked a transformative era in data science and artificial intelligence, driven by the exponential growth of big data and advancements in deep learning technologies. This period saw significant breakthroughs that reshaped industries and revolutionized how we process and analyze information.

The Rise of Big Data

Big data refers to the vast amounts of data generated every second from various sources such as social media, sensors, and transactions. The 2010s saw an unprecedented increase in data generation, leading to the development of new tools and technologies to store, process, and analyze this information. Companies like Google, Facebook, and Amazon led the way, using big data to gain insights, improve services, and drive innovation.

Advancements in Deep Learning

Deep learning, a subset of machine learning, involves neural networks with many layers that learn useful representations directly from data rather than relying on hand-crafted features. The 2010s were pivotal for deep learning due to several key factors:

- Increased Computational Power: The advent of powerful GPUs and cloud computing made it feasible to train complex neural networks on large datasets.

- Algorithmic Innovations: Architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), first proposed in earlier decades, were refined and scaled up, significantly improving the performance of deep learning models.

- Availability of Large Datasets: Publicly available datasets like ImageNet provided the necessary data to train and validate deep learning models, accelerating research and development.

Impact on Industries

The combination of big data and deep learning has had a profound impact across various sectors:

- Healthcare: AI-driven diagnostics and personalized medicine have improved patient outcomes and streamlined healthcare processes.

- Finance: Predictive analytics and algorithmic trading have transformed financial markets, enabling more accurate forecasting and risk management.

- Retail: Personalized recommendations and inventory management systems have enhanced customer experiences and operational efficiency.

- Transportation: Autonomous vehicles and smart logistics systems are beginning to change transportation, with the aim of making it safer and more efficient.

Future Prospects

As we move forward, the integration of big data and deep learning is expected to continue evolving, with emerging technologies like quantum computing and edge AI further pushing the boundaries. Ongoing research and development in these fields promise to unlock new possibilities and drive innovation across all aspects of society.

The 2010s to the present have been a period of remarkable growth and innovation in big data and deep learning. These advancements have not only transformed industries but also paved the way for a future where AI and data-driven insights play a central role in shaping our world.

Current Trends and Future Directions

Artificial intelligence continues to evolve rapidly, with several current trends shaping its development and future directions. Understanding these trends is crucial for anticipating the impact of AI on various industries and society as a whole.

1. AI in Everyday Life: AI has become an integral part of our daily lives. Virtual assistants like Siri, Alexa, and Google Assistant use natural language processing to understand and respond to user queries. AI-powered recommendation systems on platforms like Netflix and Amazon enhance user experiences by suggesting content based on individual preferences.

2. Autonomous Vehicles: Self-driving cars are one of the most exciting applications of AI. Companies like Tesla, Waymo, and Uber are developing autonomous vehicles that use AI to navigate and make real-time decisions. These advancements promise to revolutionize transportation, making it safer and more efficient.

3. Healthcare Innovations: AI is transforming healthcare by improving diagnostics, treatment planning, and patient care. Machine learning algorithms analyze medical images to detect diseases like cancer at early stages. AI-powered tools assist doctors in making more accurate diagnoses and personalized treatment plans.

4. Ethical Considerations: As AI becomes more pervasive, ethical concerns are gaining prominence. Issues such as bias in AI algorithms, data privacy, and the impact of automation on jobs are critical challenges. Ensuring fairness, transparency, and accountability in AI systems is essential for building trust and preventing harm.

5. AI and Creativity: AI is making strides in creative fields, generating art, music, and literature. Tools like OpenAI’s GPT-3 can write coherent and contextually relevant text, while AI algorithms create unique artworks and compose music. These innovations are expanding the boundaries of creativity and collaboration between humans and machines.

6. Future Research Areas: The future of AI research is focused on several key areas:

- Explainable AI (XAI): Developing AI systems that can explain their decisions and actions to humans, enhancing transparency and trust.

- General AI: Moving towards artificial general intelligence (AGI), where machines possess the ability to understand, learn, and apply knowledge across a wide range of tasks, similar to human intelligence.

- AI in Climate Change: Leveraging AI to address environmental challenges, such as predicting climate patterns, optimizing energy use, and developing sustainable practices.

Conclusion

The history and evolution of artificial intelligence is a testament to human ingenuity and the relentless pursuit of knowledge. From ancient philosophical musings to the sophisticated AI systems of today, the journey of AI has been marked by significant milestones, challenges, and breakthroughs.

Understanding the roots of AI helps us appreciate its current capabilities and anticipate its future potential. The development of expert systems in the 1980s, the rise of machine learning in the 1990s and 2000s, and the recent advancements in big data and deep learning have all contributed to making AI an integral part of our lives.

As we look to the future, it is essential to address the ethical considerations and societal impacts of AI. Ensuring fairness, transparency, and accountability in AI systems will be crucial for building trust and maximizing the benefits of this transformative technology.

By staying informed about the latest trends and future directions in AI, we can better prepare for the opportunities and challenges that lie ahead. The ongoing evolution of AI promises to bring about innovations that will continue to shape our world in profound ways.

FAQ on History and Evolution of AI

- What is the origin of artificial intelligence?

- AI’s roots trace back to ancient myths and early philosophical ideas about intelligent machines. Modern AI began in the 1950s with Alan Turing’s work and the Dartmouth Conference in 1956.

- What were the first AI programs?

- Early AI programs include the Logic Theorist (1955) and the General Problem Solver (1957), which aimed to mimic human problem-solving.

- What caused the AI winters?

- AI winters occurred in the 1970s and late 1980s due to overhyped expectations, limited computational power, and funding cuts, leading to reduced interest and progress.

- How did machine learning change AI?

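- In the 1990s, machine learning introduced algorithms that allowed computers to learn from data rather than rely only on hand-written rules, leading to advances in tasks such as classification and pattern recognition. IBM’s Deep Blue, which defeated Garry Kasparov at chess in 1997, relied mainly on search and hand-tuned evaluation rather than learning.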

- What are the current trends in AI?

- Today’s AI focuses on deep learning, big data, and applications like virtual assistants and autonomous vehicles. Ethical considerations, such as bias and privacy, are also crucial.